Is it advisable to not have health insurance in the US?

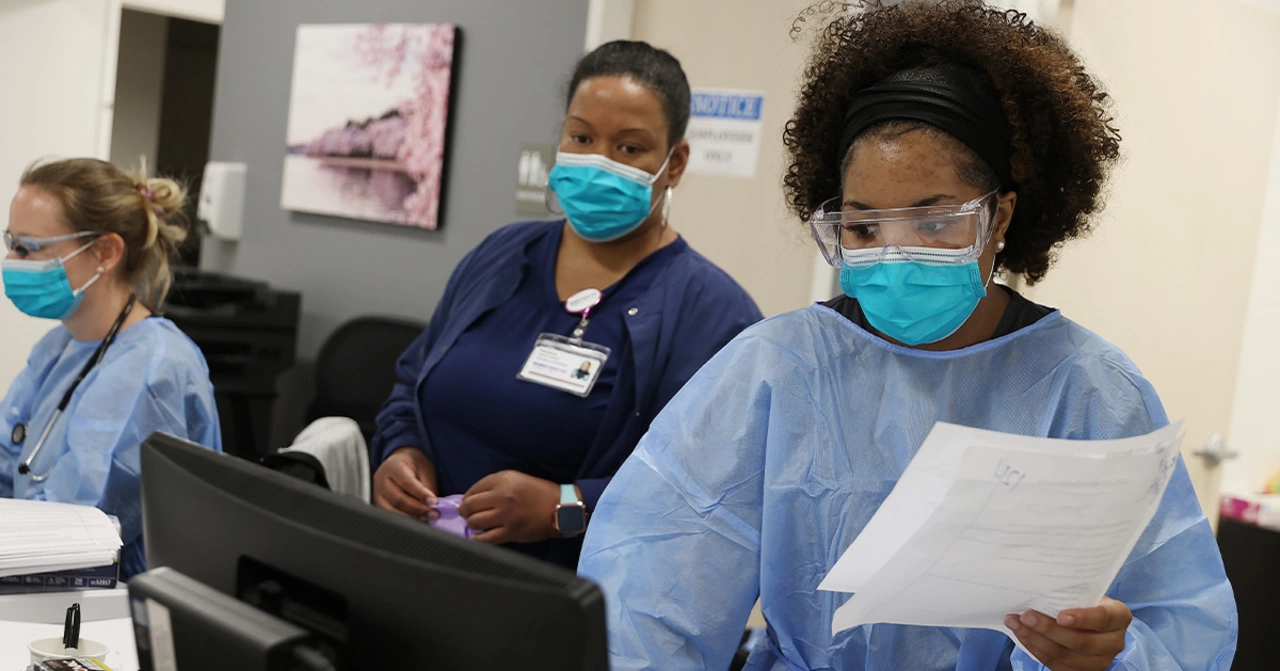

From my perspective, going without health insurance in the US is a risky move. Medical costs can be extremely high: a single emergency room visit or hospital stay can easily cost thousands of dollars, and without insurance you could find yourself in serious financial trouble if a health issue arises. Health insurance also typically covers preventive care, which can catch problems early, before they become major issues. As for penalties, the federal fine under the Affordable Care Act was reduced to $0 starting in 2019. However, a few states, including California, Massachusetts, New Jersey, and Rhode Island, as well as Washington, D.C., still charge their own penalty for going uninsured. In short, the potential risks and costs of not having health insurance make it close to a necessity.
